Imagine Ana, enthusiastic and confident, submits her application for a role that seems tailor-made for her. Yet, her high hopes are dashed when she receives a generic rejection email days later. Sound familiar? In the current job market, the candidate experience is often marred by challenges such as opaque and biased selection processes, sporadic or nonexistent updates (e.g. ghosting) on applications, and inefficient communication.

Enter Eve, a recruiter facing the tidal wave of applications for the position Ana aspired to. Week after week, Eve grapples with the deluge, deploying a mishmash of strategies to sift through resumes, identify qualified candidates, and schedule and coordinate interviews.

In an era where a single vacancy can attract thousands of applicants within hours, identifying a candidate who will perform well in the role becomes daunting. LinkedIn illustrates this vividly: a Product Manager position at DoorDash attracted 1,484 applicants in a week, while Microsoft’s Sales Strategy Enablement Lead role saw 812 candidates in just five days. This inefficiency spells frustration for both candidates and recruiters, highlighting a pressing need for a system overhaul to deliver a more effective and rewarding recruitment experience.

How AI Can Enhance the Recruitment Experience Immediately

AI holds vast potential to refine the recruiting process. Below, we explore a few areas where AI can make significant improvements right away:

Going Beyond Keywords

Many organizations use AI algorithms to scan resumes for keywords. However, the true potential of AI lies in Natural Language Processing (NLP). Advanced NLP enables AI to grasp the context and meaning of resume content, improving accuracy in matching candidates to job requirements. Moreover, AI can perform semantic analysis on resumes and job descriptions, identifying relevant skills and experiences even if expressed differently. Eightfold.ai and moonhub.ai provide solutions going beyond keywords and resumes to find the best-qualified candidates.
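To make the contrast with keyword matching concrete, here is a minimal Python sketch. The alias table `SKILL_ALIASES` and both functions are hypothetical and hand-written for illustration; they are not how any vendor named above works, and a production system would more likely rely on learned text embeddings than on a lookup table.

```python
# Toy illustration only: SKILL_ALIASES is a hand-written, hypothetical table.
# Real semantic matching would typically use learned embeddings instead.
SKILL_ALIASES = {
    "machine learning": "machine learning",
    "predictive modeling": "machine learning",
    "team leadership": "team leadership",
    "people management": "team leadership",
    "led a team": "team leadership",
}


def keyword_match(required, resume_text):
    """Return the required phrases that appear verbatim in the resume."""
    text = resume_text.lower()
    return {phrase for phrase in required if phrase in text}


def concept_match(required, resume_text):
    """Return the required phrases whose underlying concept appears in the
    resume, even when the resume words it differently."""
    text = resume_text.lower()
    found_concepts = {
        concept for alias, concept in SKILL_ALIASES.items() if alias in text
    }
    return {
        phrase
        for phrase in required
        if SKILL_ALIASES.get(phrase, phrase) in found_concepts
    }


required = ["machine learning", "team leadership"]
resume = "Built predictive modeling pipelines and led a team of four analysts."

print(keyword_match(required, resume))
print(concept_match(required, resume))
```

On this sample resume the keyword matcher finds neither requirement, while the concept matcher finds both, because "predictive modeling" and "led a team" map to the required concepts.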

Candidates also benefit immensely. With NLP and Semantic Analysis handling resume screening, they no longer need to cram their resumes with keywords or dedicate excessive time to formatting. Instead, they can prioritize showcasing their skills and experiences. AI-powered resume screening can also help mitigate unconscious biases by focusing solely on candidate qualifications, eliminating factors such as name, gender, ethnicity, or graduation year from the initial screening process. Pinpoint uses blind screening to reduce bias early in the recruitment phase.
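Blind screening can be pictured as removing identifying fields before a record reaches the screener. The sketch below assumes a candidate is a simple dictionary; the field names and the `blind_copy` helper are hypothetical and do not describe any particular product.

```python
# Field names are hypothetical; adapt them to your own applicant-tracking schema.
REDACTED_FIELDS = {"name", "gender", "ethnicity", "graduation_year"}


def blind_copy(candidate):
    """Return a copy of the candidate record without the redacted fields."""
    return {
        key: value
        for key, value in candidate.items()
        if key not in REDACTED_FIELDS
    }


candidate = {
    "id": "c-102",
    "name": "Ana",
    "gender": "F",
    "graduation_year": 2012,
    "skills": ["product strategy", "sql"],
    "years_experience": 9,
}

screened = blind_copy(candidate)
print(screened)
```

Working on a copy leaves the original record intact, so the hidden fields are still available later in the process, for example when scheduling an interview.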

Streamlining Communication and Low-value Tasks

Recruiters’ core objectives include finding the best-qualified candidates and converting them into employees. Tasks such as getting candidate availability, scheduling and rescheduling, responding to questions, and providing regular updates are necessary but tedious and time-consuming. The good news is that almost all these tasks are easily automatable, and several companies already offer solutions.

Paradox’s conversational AI product, used by companies like Disney, leverages chatbots to respond to candidate questions, schedule interviews, and send reminders. Otter.ai is fairly effective at capturing and summarizing candidate meeting notes. This is particularly helpful in highlighting key takeaways when following up with candidates or during interviewer debrief sessions.

Making Interview Evaluations More Objective

Ensuring objective interview evaluations extends beyond using standardized candidate assessment criteria.

Objective Scoring: AI algorithms can give objective scores to recorded candidate responses against predefined evaluation criteria. These scores can be compared to the interviewers’ own evaluation, flagging any significant discrepancies as potential bias.

Non-Verbal Behavior Recognition: AI can recognize patterns in interviewers’ non-verbal communication, such as facial expressions, body language, and tone of voice. By comparing these against established benchmarks, AI can alert recruiters to any deviations that may indicate bias. Similarly, AI can assess the consistency of non-verbal behavior across interviews, helping to detect and address biases that affect evaluations.

Companies like HireVue offer a platform to conduct video interviews and evaluate candidates for non-verbal behavior. Expanding these platforms to coach and assess interviewers can further enhance objectivity and fairness in the interview process.
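The objective-scoring idea described above, comparing AI scores with the interviewer’s own scores and flagging large gaps, can be sketched in a few lines of Python. The criteria names, the 1 to 5 scale, and the `threshold` value are assumptions made for illustration.

```python
def flag_discrepancies(ai_scores, interviewer_scores, threshold=1.5):
    """Return the criteria where the interviewer's score differs from the
    AI score by more than `threshold`, with the signed gap for each."""
    flagged = {}
    for criterion, ai_score in ai_scores.items():
        human_score = interviewer_scores.get(criterion)
        if human_score is None:
            continue  # the interviewer did not score this criterion
        gap = human_score - ai_score
        if abs(gap) > threshold:
            flagged[criterion] = gap
    return flagged


# Hypothetical scores on a 1-5 scale.
ai_scores = {"communication": 4.0, "problem_solving": 3.5, "domain_knowledge": 4.5}
interviewer_scores = {"communication": 2.0, "problem_solving": 3.0, "domain_knowledge": 4.5}

print(flag_discrepancies(ai_scores, interviewer_scores))
# {'communication': -2.0}
```

A flagged gap is a prompt for a human to take a second look, not proof of bias; the threshold would need tuning against real evaluation data.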

Looking To The Future

What are some ways emerging generative AI technology may transform recruitment in the future? Here are a couple of thoughts:

Recruiters become AI fine-tuners

Recruiting is iterative, and priorities adapt to new information based on interviews, the available candidate pool, urgency, and other considerations. Looking beyond general automation, recruiters may soon be able to integrate these insights to customize generative AI tools into role-specific ones. Recruiters will be able to tailor models to their needs using customization techniques such as in-context prompting, retrieval-augmented generation (RAG), and reinforcement learning from human feedback (RLHF). Of these, only RLHF retrains the model itself; in-context prompting and RAG change what the model is shown at query time. Through a conversational interface such as a chatbot, recruiters can refine screening criteria and context to be used in future prompts. As company mission, strategy, recruitment, and compensation policies are developed, RAG techniques can automatically retrieve this information (documents, videos, or other media) and supply it to the models powering recruiter workflows. To refine further, tools can use RLHF to let recruiters provide feedback on predictions; that feedback trains a reward model, which in turn guides the core LLM in future training runs. Note: Methods like RLHF are complex and costly, so they would not fit all use cases today.
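As a rough illustration of the RAG idea, the sketch below retrieves the most relevant policy document for a question and places it in a prompt. Everything here is an assumption made for the example: the documents are invented, word overlap stands in for the vector search a real system would likely use, and the resulting prompt would still have to be sent to an LLM, which this sketch does not do.

```python
import re


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, top_k=1):
    """Rank documents by word overlap with the question.
    This is a simple stand-in for embedding-based retrieval."""
    question_words = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(question_words & tokenize(doc["text"])),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question, documents):
    """Insert the retrieved documents into the prompt as context."""
    context = "\n".join(
        f"- {doc['title']}: {doc['text']}" for doc in retrieve(question, documents)
    )
    return (
        "Use only the context below to answer.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


# Hypothetical company documents.
policies = [
    {"title": "Compensation policy",
     "text": "Salary bands for senior engineers range by level and location."},
    {"title": "Remote work policy",
     "text": "Employees may work remotely up to three days per week."},
]

prompt = build_prompt("How many days per week can employees work remotely?", policies)
print(prompt)
```

Because the documents are looked up at query time, updating a policy only means updating the document store; the model itself is not retrained.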

Given the massive economic opportunity, many well-funded foundational AI startups (e.g. OpenAI, Anthropic) and almost all large tech companies (e.g. Microsoft, Google) are working on various types of customizable AI platforms for the enterprise. Given the importance of talent, we expect that recruiting will be a priority application.

Better Explanations = Better Outcomes

As a candidate, how often have you experienced rejection during screening without explanation? With more automation, this poor experience results in a lack of trust and fear of underlying bias, prompting jurisdictions like New York City to pass laws regulating automated employment decision tools. While we covered how AI can make screening faster and less biased, it may soon be able to automatically provide the recruiter and candidate with a plain-language rationale for its decision.

Explainability refers to an emerging set of approaches that make it possible for humans to understand and trust AI predictions by describing how a model arrived at a particular result. One area focuses on training data, employing analysis and validation for higher confidence in the features that a model considers. Another area focuses on models, either by selecting those that are easier to interpret (often because they are simpler) or by using mathematical methods to analyze the intermediate layers of a neural network that lead to the final prediction. In combination, these areas yield models more easily understood by computer scientists and, soon enough, non-technical users.
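One way to picture the "simpler, easier to interpret" end of this spectrum is a linear score whose per-feature contributions can be read off directly and turned into a plain-language rationale. The weights, feature names, and helper functions below are hypothetical and chosen only for illustration.

```python
# Hypothetical weights for a simple, interpretable linear screening score.
WEIGHTS = {
    "years_experience": 0.5,
    "required_skills_matched": 1.0,
    "certifications": 0.25,
}


def explain_score(features):
    """Return the total score and each feature's contribution, largest first."""
    contributions = {
        name: WEIGHTS[name] * features.get(name, 0) for name in WEIGHTS
    }
    total = sum(contributions.values())
    ranked = sorted(
        contributions.items(), key=lambda item: abs(item[1]), reverse=True
    )
    return total, ranked


def plain_language(features):
    """Turn the contributions into short, readable sentences."""
    total, ranked = explain_score(features)
    lines = [f"Overall score: {total:.2f}"]
    for name, value in ranked:
        lines.append(f"{name.replace('_', ' ')} contributed {value:+.2f}")
    return "\n".join(lines)


candidate = {"years_experience": 4, "required_skills_matched": 3, "certifications": 2}
print(plain_language(candidate))
```

Deep neural networks do not decompose this cleanly, which is why the second family of methods mentioned above, analyzing a network’s intermediate layers, exists.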

In a world where explainability is built into tools, recruiters and candidates will be more transparent with each other (at scale!) when communicating decisions. Companies will have the opportunity to improve the candidate experience, steering candidates earlier toward roles that are a better fit and demonstrating how they are meeting emerging laws around bias and transparency for automated recruitment tools.

Challenges

Here we explore technical and ethical challenges in using AI for recruiting.

Technical Challenge: LLMs and Graphs are Disconnected

Before applying LLMs to recruiting, let’s examine the structures underlying recruiting data, their representation in computer science, and why LLMs are disconnected from them. Recruiting data is structured much like data in social media apps, where everything is hyper-connected. Your connections have, in turn, their own networks of connections, some of whom work at the hiring manager’s employer or are one skip away. Employers identify skills required for jobs, and the graph of skills across billions of eligible candidates is even larger. All this data is represented in “graphs”. In mathematics, a graph consists of nodes, each of which is an object, and edges, each of which connects two nodes and indicates a relationship between them. Graphs describe how websites connect to each other through hyperlinks. Graphs are also how Facebook decides to deliver content from one person to another person’s News Feed (using TAO).
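The node-and-edge description above maps directly onto code. The sketch below stores a small, invented professional network as an adjacency structure and uses breadth-first search to count how many connections separate two people; the names and the `hops_between` helper are hypothetical.

```python
from collections import deque

# Hypothetical professional network: each pair is an edge between two nodes.
edges = [
    ("Ana", "Ben"),
    ("Ben", "Eve"),
    ("Eve", "Hiring Manager"),
    ("Ana", "Cara"),
]

# Build an undirected graph as a mapping from each node to its neighbors.
graph = {}
for a, b in edges:
    graph.setdefault(a, set()).add(b)
    graph.setdefault(b, set()).add(a)


def hops_between(graph, start, goal):
    """Breadth-first search: number of edges on the shortest path,
    or None if the two nodes are not connected."""
    if start == goal:
        return 0
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        node, distance = queue.popleft()
        for neighbor in graph.get(node, ()):
            if neighbor == goal:
                return distance + 1
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append((neighbor, distance + 1))
    return None


print(hops_between(graph, "Ana", "Eve"))             # 2
print(hops_between(graph, "Ana", "Hiring Manager"))  # 3
```

On a handful of nodes this is trivial; the difficulty discussed in this section comes from running such queries over billions of nodes and edges.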

Using LLMs to work on graph data is complex, and no technology exists to connect them scalably. LLMs work on natural language, images, video, and relational data, but graphs add many nodes, edges, and dependencies. Data in people-based apps grows rapidly. Neuronn AI’s team is from Meta, where logging for AI adds 100 TB of data… every second. Retraining an LLM on such enormous data requires systems, computing, and reliability at scale. Fine-tuning an LLM at runtime, i.e. during inference, isn’t feasible for planet-scale data. A rethinking of deep tech is needed for LLMs, search and retrieval, and graphs. Only then can AI scale up relationship discovery, candidate pinpointing, skills matching, and placement prediction.

Google researchers have a research draft titled “Talk like a Graph: Encoding Graphs for Large Language Models,” yet this covers only a fraction of the technology needed. The future scenarios discussed earlier assume that technologists will find a solution.
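The general idea of encoding a graph as text, so that an LLM can read it inside a prompt, can be sketched as follows. This is an illustrative guess at what such an encoding might look like, not the method from the draft mentioned above; the sentence templates and the `encode_graph_as_text` helper are hypothetical.

```python
def encode_graph_as_text(nodes, edges):
    """Describe an undirected graph in plain sentences for use in a prompt."""
    lines = [f"The graph has {len(nodes)} people: {', '.join(nodes)}."]
    for a, b in edges:
        lines.append(f"{a} and {b} are connected.")
    return "\n".join(lines)


# Hypothetical three-person network.
nodes = ["Ana", "Ben", "Eve"]
edges = [("Ana", "Ben"), ("Ben", "Eve")]

question = "Is Ana connected to Eve through a mutual contact?"
prompt = encode_graph_as_text(nodes, edges) + "\nQuestion: " + question
print(prompt)
```

The prompt grows by one sentence per edge, which hints at why this approach alone does not reach the scale of data described in this section.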

Ethical Challenges

Implementing AI in recruitment brings about ethical considerations, such as bias, transparency, and compliance. The reliance on historical data for AI training poses a risk of perpetuating biases, underscoring the importance of transparent algorithms to effectively mitigate such biases. Compliance with legal standards is crucial, particularly regarding data privacy and consent from candidates for AI usage. Beyond compliance, the depersonalization of the recruitment process and overreliance on automation present challenges of their own. These include the risk of losing the human touch and neglecting human judgment, which could adversely affect candidate experience and recruitment outcomes.

Exploring a Value Sensitive Design approach, which emphasizes incorporating human values into technology design, offers the potential to develop AI-driven recruitment tools that consider ethical norms and prioritize human-centric values. By engaging stakeholders in the design phase, actively seeking to identify and mitigate biases, striving for transparency, and finding a harmonious balance between AI functionality and human discernment, organizations might create recruitment systems that aspire to achieve both efficiency and innovation, while also respecting the dignity and rights of participants. This approach suggests a path forward where AI in recruitment could enrich the hiring landscape, promoting inclusivity, fairness, and respect for all involved.

Connecting Companies With The Right AI Solutions

At Neuronn, we are connecting companies evaluating AI for talent management and startups serving this space. If you are interested in exploring AI-driven talent acquisition tools for your business or are building new AI products for this space, please contact the Neuronn AI team at vik@neuronn.ai.

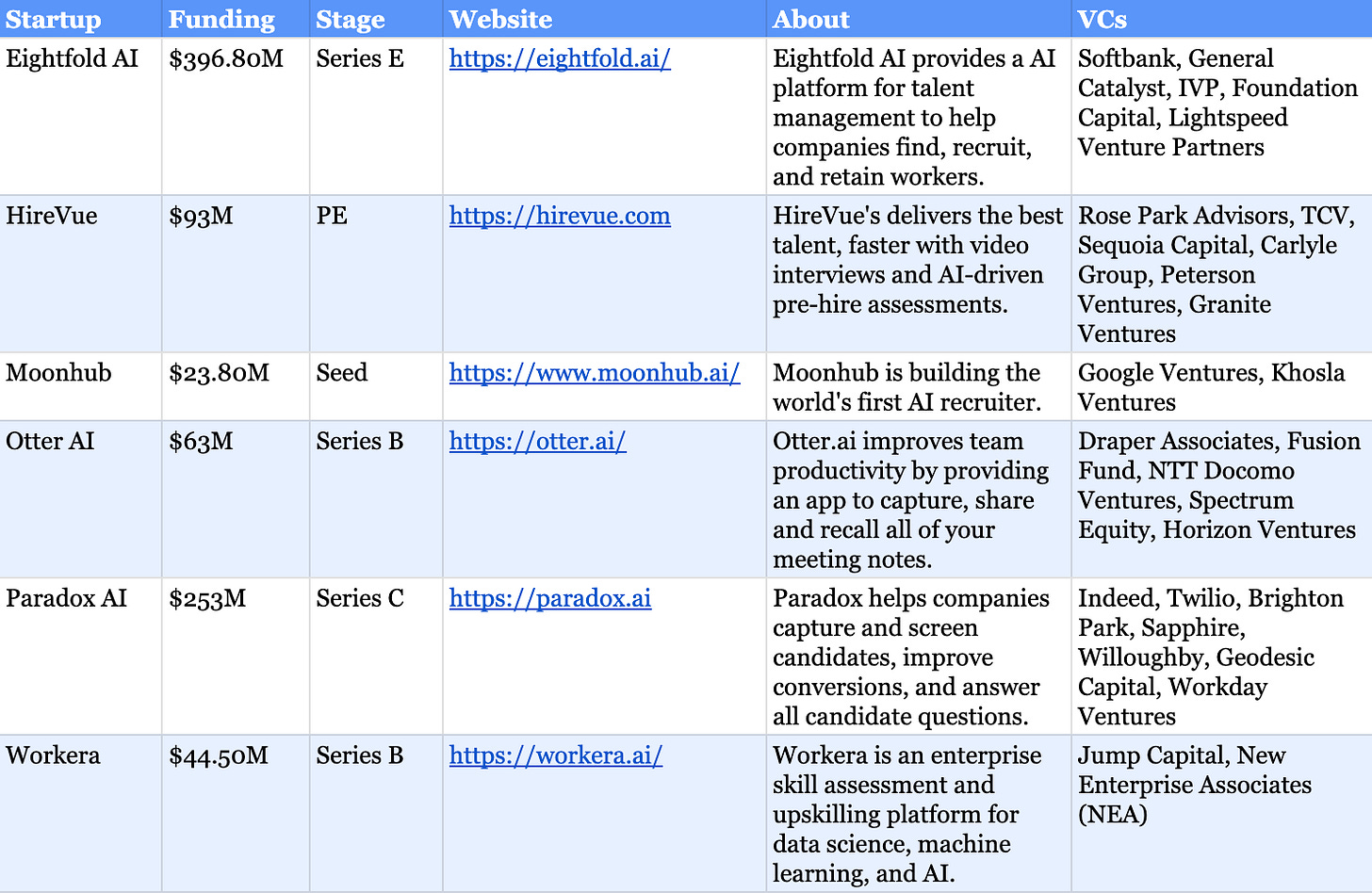

AI startups mentioned in this article:

Eightfold.ai, HireVue, Moonhub, Otter AI, Paradox, Workera

About Neuronn AI

Neuronn AI is a professional advisory group rooted in prominent Artificial Intelligence companies such as Meta. Leveraging extensive expertise in Data Science & Data Engineering, AI Product Management, Marketing Research, Strategic and Brand Marketing, Finance, and Program Management, we offer fractional assistance to startups looking to launch AI-driven product ideas and provide consulting services to enterprises seeking to implement AI to accelerate their outcomes. Neuronn AI’s advisors include Alex Kalinin, Carlos F. Romero, Enrique Ortiz, Karen Trachtenberg, Lacey Olsen, Norman Lee, Owen Nwanze-Obaseki, Rick Gupta, Seda Palaz Pazarbasi, Sid Palani and Vik Chaudhary.
